I wrote a ChatGPT plugin that tells me when to leave for the next tram

A step-by-step description of how I wrote my first ChatGPT plugin, what I learned and which problems I encountered

Have you tried using ChatGPT to carry out a complicated mathematical operation? Or to find out when the next tram leaves for the city center? Or to check the result of your favorite team's final match? If so, you probably got an unsatisfactory result. At best, ChatGPT informed you that it cannot give you the correct answer because it does not have access to current data. In the worst case, it gave you an answer that looks correct but unfortunately is not: it hallucinated. If the answer to your prompt requires current knowledge, access to rapidly changing data or a very precise result, ChatGPT does not always work.

OpenAI decided to solve this problem by harnessing the power of external developers. They created a platform where developers can build plugins that solve specific user problems. I decided to quickly explore its capabilities and write a simple plugin that extends ChatGPT with a new skill: understanding timetables and answering the simplest questions, e.g. “What is the next tram line [tram number] from the [stop name] stop?”

How to create a plugin in ChatGPT

Data preparation

Before we start creating a plugin, we must have some data and a web service that will power the OpenAI model. For this purpose, we will have to build a web application that will publish endpoints. They will receive requests from OpenAI and return the necessary data.

For the purposes of creating a plugin, we don't have to deploy anything publicly yet. OpenAI thought about the experience of plugin developers here and made it possible to connect to your service via localhost: the browser communicates directly with the service running on your machine.

My service will need a current public transport timetable. Google created the GTFS (General Transit Feed Specification) standard, which defines how timetables can be stored. The standard was widely adopted and is currently used by many public carriers and transport operators.

For this project, I used the data of the transport operator from my city - Wrocław MPK (Poland, Europe).
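A GTFS feed is a ZIP archive of CSV text files such as stops.txt, routes.txt, trips.txt and stop_times.txt. As a rough illustration, reading stops.txt into objects might look like the sketch below. It assumes simple rows without quoted fields (a real feed may need a proper CSV parser), and the sample rows are made up, not taken from the Wrocław feed.

```typescript
// Shape of one row of GTFS stops.txt (only the columns used in this post).
interface GtfsStop {
  stop_id: string;
  stop_code: string;
  stop_name: string;
  stop_lat: number;
  stop_lon: number;
}

// Parses the content of stops.txt.
// Assumption: no quoted fields and no commas inside values.
function parseStops(csv: string): GtfsStop[] {
  const lines = csv.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (lines.length === 0) {
    return [];
  }
  const header = lines[0].split(",").map((h) => h.trim());
  const stops: GtfsStop[] = [];
  for (const line of lines.slice(1)) {
    const cells = line.split(",");
    // Looks up a cell by column name, so the column order does not matter.
    const get = (column: string): string => {
      const i = header.indexOf(column);
      return i >= 0 && i < cells.length ? cells[i].trim() : "";
    };
    stops.push({
      stop_id: get("stop_id"),
      stop_code: get("stop_code"),
      stop_name: get("stop_name"),
      stop_lat: parseFloat(get("stop_lat")),
      stop_lon: parseFloat(get("stop_lon")),
    });
  }
  return stops;
}

// Made-up sample data for illustration only.
const sampleStopsTxt = [
  "stop_id,stop_code,stop_name,stop_lat,stop_lon",
  "1,10001,Example Stop A,51.10,17.03",
  "2,10002,Example Stop B,51.11,17.04",
].join("\n");

const parsedStops = parseStops(sampleStopsTxt);
```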

Web service that will provide data to ChatGPT

Once I have the data, I need to create the previously mentioned web service that will give OpenAI access to it. For this purpose, I wrote a simple web service in Node.js and TypeScript. I also used an SQLite3 database so that I could easily search the data using SQL.
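To give an idea of what searching with SQL can look like, here is a minimal sketch of building a parameterized query for a stop search. The table name `stops` and its columns are an assumption (they mirror the GTFS stops.txt file); the schema in my repository may differ, and the helper names are made up for this example.

```typescript
interface SqlQuery {
  sql: string;
  params: (string | number)[];
}

// Escapes LIKE wildcards so that user input is matched literally.
function escapeLike(text: string): string {
  return text.replace(/[\\%_]/g, (ch) => "\\" + ch);
}

// Builds a query that finds stops whose name contains the given text.
// Assumption: a table "stops" with the GTFS column names.
function buildStopSearchQuery(namePart: string, limit: number = 10): SqlQuery {
  return {
    sql:
      "SELECT stop_id, stop_code, stop_name, stop_lat, stop_lon " +
      "FROM stops WHERE stop_name LIKE ? ESCAPE '\\' " +
      "ORDER BY stop_name LIMIT ?",
    params: ["%" + escapeLike(namePart) + "%", limit],
  };
}

const stopQuery = buildStopSearchQuery("Bzowa");
```

The `sql` string and `params` array can then be handed to whichever SQLite driver you use. Keeping the user input in parameters rather than concatenating it into the SQL string avoids injection problems.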

There is a project that can do exactly what I implemented myself: gtfs. Unfortunately, my local public transport operator published schedule data in the form of GTFS with the "variants" option, which this project does not support. That's why I decided to write it myself.

My web server publishes four endpoints responsible for:

- Fetching a “trip” with a given ID

- Fetching departure times for a given trip and stop

- Searching for stops by name

- Fetching the list of routes (lines)

Bus and tram lines are listed as routes with their short descriptions of connections. Trips contain data on each ride of a given line. Information about what time and at what stop a given line stops as part of a given route is included in the "trips" data set.
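The four endpoints listed above could be laid out roughly as in the sketch below. Only `/stops/search/{name}` and its `searchStops` identifier appear later in this post; the other paths and identifiers are hypothetical placeholders. Express does this kind of matching for you, so the small matcher here only makes the structure explicit.

```typescript
interface Endpoint {
  operationId: string;
  template: string;
}

const endpoints: Endpoint[] = [
  { operationId: "searchStops", template: "/stops/search/{name}" },
  // The three entries below are hypothetical and only illustrate the idea.
  { operationId: "getRoutes", template: "/routes" },
  { operationId: "getTrip", template: "/trips/{tripId}" },
  { operationId: "getStopTimes", template: "/trips/{tripId}/stops/{stopId}/times" },
];

// Matches a request path against a template such as "/trips/{tripId}".
// Returns the extracted parameters, or null when the path does not match.
function matchTemplate(template: string, path: string): Record<string, string> | null {
  const templateParts = template.split("/");
  const pathParts = path.split("/");
  if (templateParts.length !== pathParts.length) {
    return null;
  }
  const params: Record<string, string> = {};
  for (let i = 0; i < templateParts.length; i++) {
    const segment = templateParts[i];
    const isParam =
      segment.charAt(0) === "{" && segment.charAt(segment.length - 1) === "}";
    if (isParam) {
      if (pathParts[i] === "") {
        return null; // an empty value is not a valid path parameter
      }
      params[segment.slice(1, -1)] = decodeURIComponent(pathParts[i]);
    } else if (segment !== pathParts[i]) {
      return null;
    }
  }
  return params;
}

// Finds the first endpoint whose template matches the path.
function resolveEndpoint(
  path: string
): { operationId: string; params: Record<string, string> } | null {
  for (const endpoint of endpoints) {
    const params = matchTemplate(endpoint.template, path);
    if (params !== null) {
      return { operationId: endpoint.operationId, params: params };
    }
  }
  return null;
}
```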

In this post I focus on problems specific to OpenAI plugins. To learn more about how to quickly create a web server in Node.js, I recommend the Getting started section of the Express.js framework.

The complete code is available here: GitHub. Now that we have a basic web server, we can start creating a plugin.

Description of the service

We need to create a description of our service. OpenAI does not know what structure our API has - what it can do, where it can send queries, what data to provide. For this purpose, we need to create two files - ai-plugin.json and openapi.yaml.

The first contains basic metadata - name, description, authentication method, URLs and email address.
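For orientation, here is a sketch of such a manifest expressed as a TypeScript object. The field names follow the manifest format from the OpenAI plugin documentation as I understand it; every value is a placeholder for illustration (only the localhost port matches my setup), so check the official documentation and my repository for the real file.

```typescript
interface PluginManifest {
  schema_version: string;
  name_for_human: string;
  name_for_model: string;
  description_for_human: string;
  description_for_model: string;
  auth: { type: string };
  api: { type: string; url: string };
  logo_url: string;
  contact_email: string;
  legal_info_url: string;
}

// Builds a manifest for a service running at the given base URL.
// All texts below are placeholders, not the values from the real plugin.
function buildManifest(baseUrl: string): PluginManifest {
  return {
    schema_version: "v1",
    name_for_human: "Tram timetable (example)",
    name_for_model: "tram_timetable_example",
    description_for_human: "Answers questions about tram departures.",
    description_for_model:
      "Use this plugin to look up stops, routes and departure times.",
    auth: { type: "none" },
    api: { type: "openapi", url: baseUrl + "/openapi.yaml" },
    logo_url: baseUrl + "/logo.png",
    contact_email: "contact@example.com",
    legal_info_url: "https://example.com/legal",
  };
}

const pluginManifest = buildManifest("http://localhost:4002");
const pluginManifestJson = JSON.stringify(pluginManifest, null, 2);
```

ChatGPT looks for this file under `/.well-known/ai-plugin.json` on your service. The `description_for_model` text matters most, because the model reads it to decide when to call the plugin.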

The second file is crucial: it is a description of your API in the OpenAPI format. Such a file documents your service; in our case, it contains a description of four routes. Each of them has a description and detailed information about what data it returns, including its type and structure. We also describe all additional parameters that our endpoints accept.

An example description of the path responsible for finding stops looks like this:

    /stops/search/{name}:
      get:
        operationId: searchStops
        summary: Search for stops by name
        parameters:
          - name: name
            in: path
            required: true
            description: Part of the stop name
            schema:
              type: string
        responses:
          '200':
            description: Successful operation
            content:
              application/json:
                schema:
                  type: array
                  items:
                    $ref: '#/components/schemas/Stop'

The operationId field contains a unique identifier of the given operation. In summary, we describe what it does. In parameters, we describe the only parameter we accept: a string by which we search for stops.

We document the response for status code 200, i.e. when everything goes well. We expect our endpoint to return an array of "Stop" objects. As you can see, I referenced an object defined elsewhere (so that it can be reused in this document without duplicating the description).

Our Stop is described as follows:

    Stop:
      type: object
      properties:
        stop_id:
          type: string
        stop_code:
          type: string
        stop_name:
          type: string
        stop_lat:
          type: number
        stop_lon:
          type: number

Its properties are the ID, code, name and geographical coordinates of the stop.

The complete OpenAPI description file prepared for this plugin is available here: openapi.yaml

It is worth using tools that validate the OpenAPI file. It took me a while to find a simple bug that the VS Code plugin openapi-lint caught.
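On the TypeScript side, the same schema can be mirrored by an interface plus a small runtime check. This is only a sketch: the schema above does not mark any property as required, so treating all five as mandatory is my assumption, and the sample coordinates are made up.

```typescript
// TypeScript counterpart of the "Stop" schema from the OpenAPI file.
interface Stop {
  stop_id: string;
  stop_code: string;
  stop_name: string;
  stop_lat: number;
  stop_lon: number;
}

// Runtime check that an unknown value has the shape of a Stop.
// Assumption: all five properties must be present.
function isStop(value: unknown): value is Stop {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const v = value as Record<string, unknown>;
  return (
    typeof v["stop_id"] === "string" &&
    typeof v["stop_code"] === "string" &&
    typeof v["stop_name"] === "string" &&
    typeof v["stop_lat"] === "number" &&
    typeof v["stop_lon"] === "number"
  );
}

// Checks the documented response of the search endpoint: an array of stops.
function isStopArray(value: unknown): value is Stop[] {
  return Array.isArray(value) && value.every((item) => isStop(item));
}

// Example response body with made-up values.
const exampleResponseBody =
  '[{"stop_id":"1","stop_code":"10001","stop_name":"Bzowa","stop_lat":51.1,"stop_lon":17.0}]';
const exampleIsValid = isStopArray(JSON.parse(exampleResponseBody));
```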

In addition, our service can provide a logo. It will be used in the OpenAI interface.

We already have a properly prepared service, so now we can check how it works in the ChatGPT interface.

How to access the option to create ChatGPT plugins?

Currently, not everyone can create ChatGPT plugins. To have such an opportunity, you must sign up for the waitlist, fill in basic data, and wait for access. Plugins also work only with the GPT-4 model, which requires a paid subscription.

Create a ChatGPT plugin

Once we have the ability to create plugins, we can start working. Launch ChatGPT, switch to the GPT-4 model and choose the “Plugins (beta)” option. Now, go to the plugin store – a modal will appear, in which we select "Develop your own plugin".

Enter the URL of the service - in my case it is http://localhost:4002. OpenAI will automatically download ai-plugin.json and openapi.yaml.

Many developers encounter problems at this stage. The most common causes are errors in the openapi.yaml file and the lack of proper CORS support.

To deal with the first one, enabling developer tools is a good idea. Those can indicate where exactly the problem lies and force the re-downloading of the corrected API definitions – an excellent tool that has been surprisingly hidden in the interface. It seems to me that it should have been enabled by default for every developer creating a plugin.

CORS is a mechanism that controls which services browsers can connect to. In short, the server must confirm to the browser that a given website is allowed to query it. Adding support for this mechanism varies depending on the technology used; in my case, it came down to using the cors library for the Express.js server.
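To show what that confirmation amounts to, here is a framework-independent sketch of the response headers a server might send. The allowed origin `https://chat.openai.com` is my assumption about where the ChatGPT interface runs, and the function name is made up; with Express, the cors library sets equivalent headers for you.

```typescript
// Assumption: the ChatGPT web interface is served from this origin.
const allowedOrigins: string[] = ["https://chat.openai.com"];

// Returns the CORS headers for a request coming from the given origin.
// An unknown or missing origin gets no CORS headers, so the browser blocks it.
function corsHeaders(
  requestOrigin: string | undefined,
  allowed: string[]
): Record<string, string> {
  if (requestOrigin === undefined || allowed.indexOf(requestOrigin) === -1) {
    return {};
  }
  return {
    "Access-Control-Allow-Origin": requestOrigin,
    "Access-Control-Allow-Methods": "GET, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
    // Tells caches that the response depends on the Origin request header.
    "Vary": "Origin",
  };
}

const headersForChatGpt = corsHeaders("https://chat.openai.com", allowedOrigins);
const headersForStranger = corsHeaders("https://example.com", allowedOrigins);
```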

Now that our plugin is live, we can try it out.

Demo time

Go to ChatGPT and choose the GPT-4 model. Make sure you have your plugin selected from the list below. Additionally, make sure that you have developer tools enabled (you can do it in settings).

It is also worth opening the Network tab in the browser. This will allow you to see requests made by ChatGPT to your server and responses.

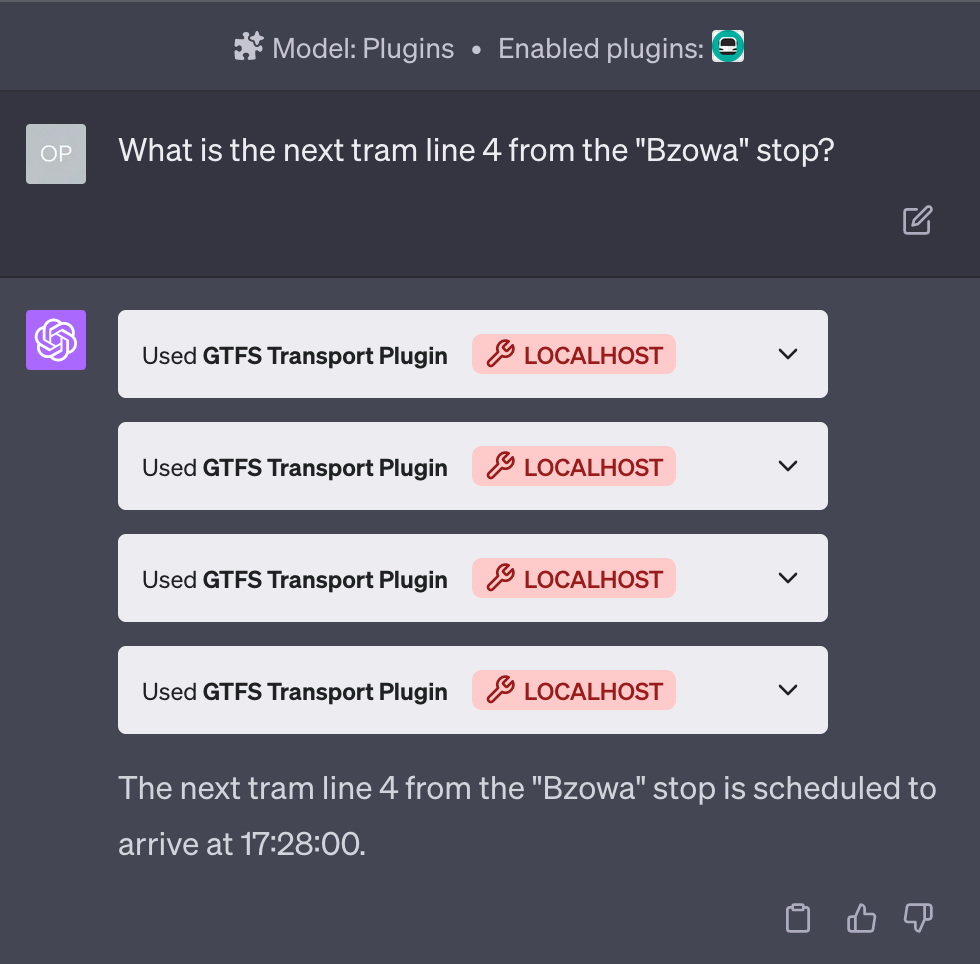

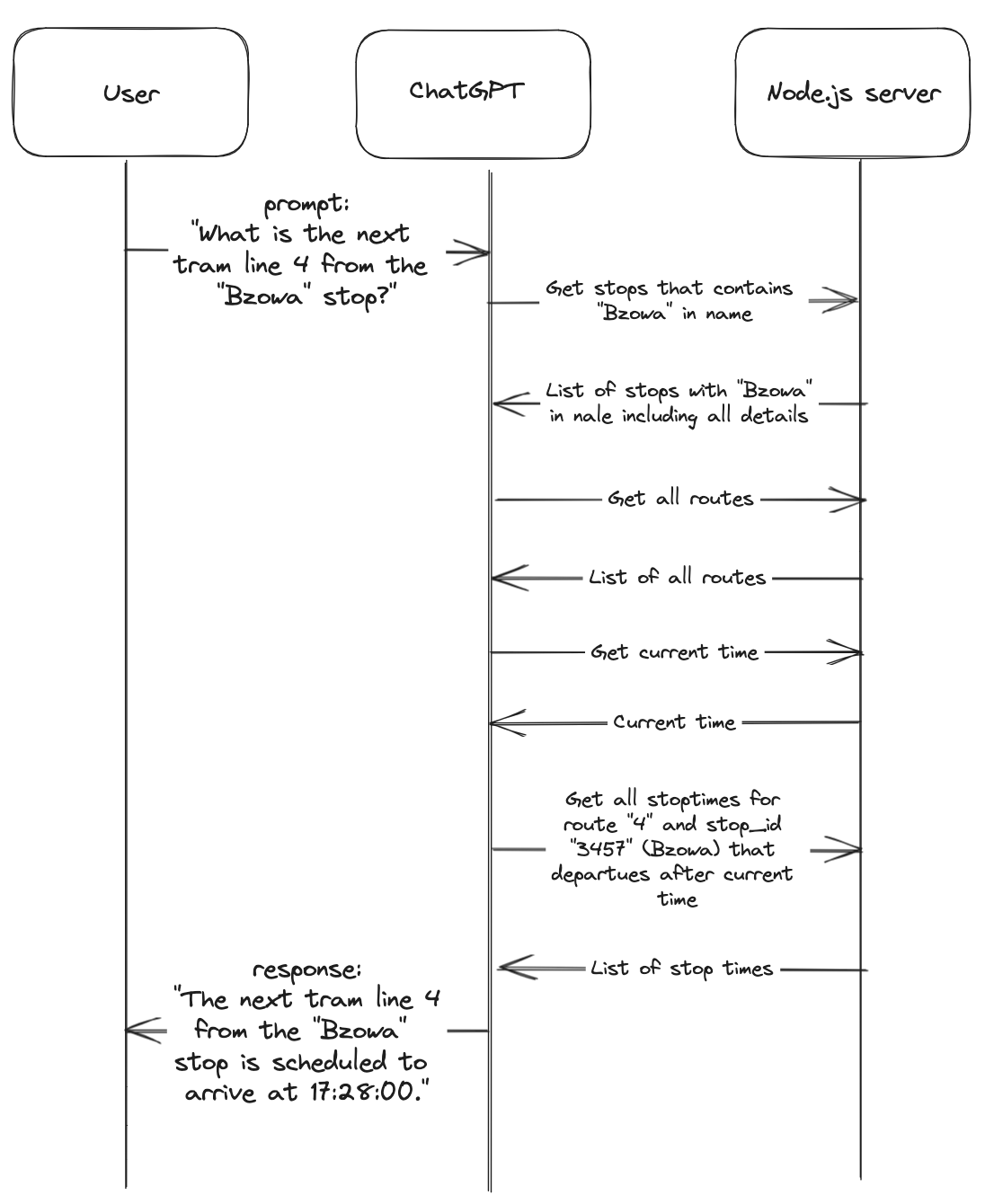

Let's start by prompting OpenAI with the first question. In this case, we want to determine when the next tram of line 4 leaves from the "Bzowa" stop. We enter the query: “What is the next tram line 4 from the "Bzowa" stop?” and press enter.

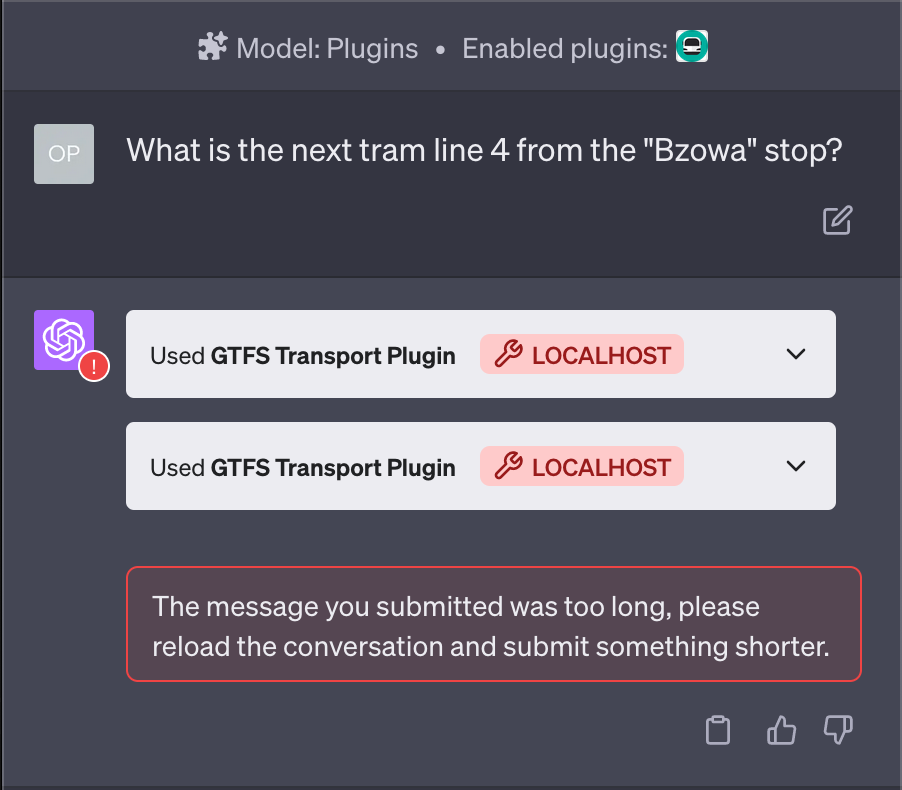

At this stage, it often turns out we do not receive a response, only an error message. It happens because our API returns too much data.

OpenAI cannot process that much content returned by our service, so we receive an error message. It makes sense: for a question about departure times, we returned an object with a hundred results. We return all available data, but for questions about the nearest connections that is not necessary. We can limit the number of results returned, e.g. to 10.

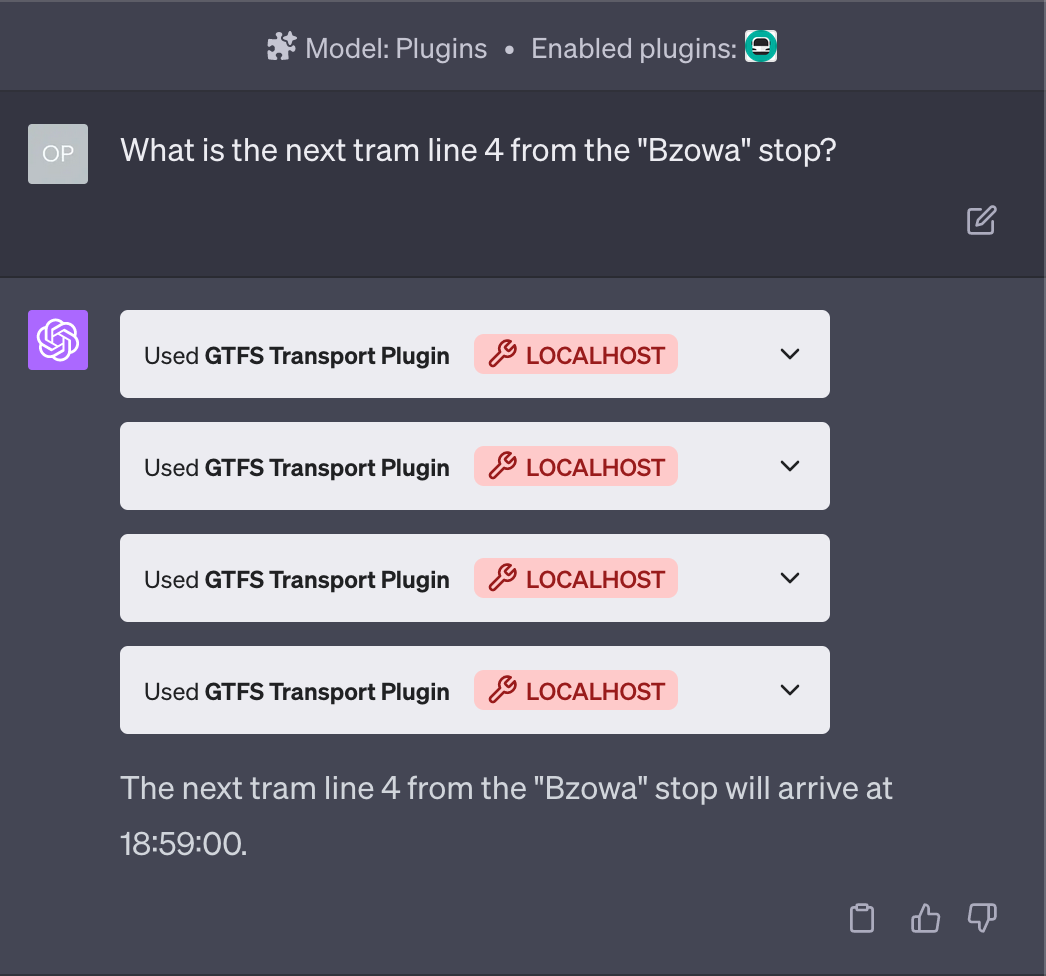
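Capping the response size can be as simple as the helper sketched below. The function name and the optional `requested` parameter are made up for this example; the default cap of 10 follows the number mentioned above.

```typescript
// Returns at most `max` items. A caller may ask for fewer via `requested`,
// but can never get more than the cap.
function limitResults<T>(items: T[], requested?: number, max: number = 10): T[] {
  const wanted =
    requested === undefined || isNaN(requested) ? max : Math.floor(requested);
  const count = Math.max(0, Math.min(wanted, max));
  return items.slice(0, count);
}

// Example: a response with a hundred entries is cut down to ten.
const hundredEntries: number[] = [];
for (let i = 0; i < 100; i++) {
  hundredEntries.push(i);
}
const cappedEntries = limitResults(hundredEntries);
```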

After implementing the result limit in our web service, we get an answer to our question, but it is wrong. Why, you may ask? Suppose it is noon when we send the query, yet the answer says that it is currently 04:09:00. Unfortunately, OpenAI cannot fill in the parameters with the current time, and we have to fix it somehow.

What can we do?

One of the possibilities is to add a parameter that would instruct my server to filter out the results and return the closest ones automatically. This would be the best solution, although I was curious about another approach. What will happen if our server publishes the "get-current-time" endpoint, which will return the current time? Will OpenAI use it and automatically pass it in a parameter of our other method?

I checked it and it worked as expected.

OpenAI fetched the current time and passed it to another method. Mind you, this is not an optimal solution, as it requires an additional request.

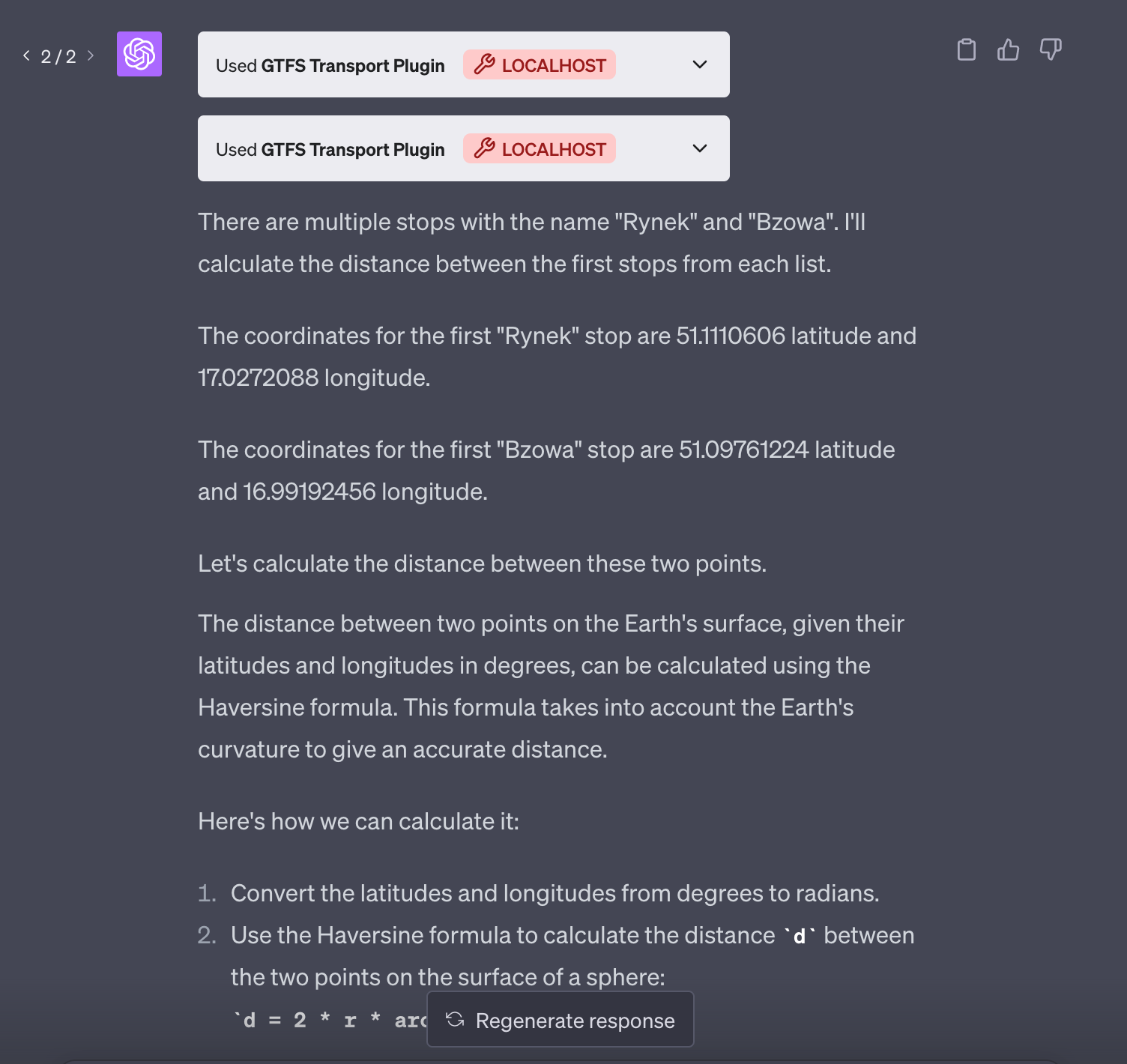
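Both ideas, the server-side filter and the "get-current-time" endpoint, can be sketched in a few lines. The function names and the `current_time` field are made up for this example, and the code assumes the server clock runs in the same time zone as the timetable. GTFS stores times as HH:MM:SS and allows hours above 23 for trips that run past midnight, which is why the comparison works on seconds rather than on strings.

```typescript
function pad2(value: number): string {
  return value < 10 ? "0" + value : String(value);
}

// Formats the local time of a Date as HH:MM:SS.
function formatTime(date: Date): string {
  return (
    pad2(date.getHours()) + ":" + pad2(date.getMinutes()) + ":" + pad2(date.getSeconds())
  );
}

// Converts HH:MM:SS into seconds since the start of the service day.
function toSeconds(time: string): number {
  const parts = time.split(":");
  return Number(parts[0]) * 3600 + Number(parts[1]) * 60 + Number(parts[2]);
}

// Server-side filter: the earliest departures at or after the given time.
function nextDepartures(times: string[], after: string, limit: number = 10): string[] {
  const threshold = toSeconds(after);
  return times
    .filter((t) => toSeconds(t) >= threshold)
    .sort((a, b) => toSeconds(a) - toSeconds(b))
    .slice(0, limit);
}

// Body that a hypothetical "get-current-time" endpoint could return.
function currentTimePayload(now: Date): { current_time: string } {
  return { current_time: formatTime(now) };
}

// Made-up departure times for illustration.
const exampleTimes = ["04:09:00", "12:15:00", "11:59:00", "12:03:00", "25:10:00"];
const upcoming = nextDepartures(exampleTimes, "12:00:00", 2);
```

With the filter on the server, the model no longer has to know what time it is, which saves the extra request.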

OpenAI can use this information to answer other questions, e.g. about the distance between stops. Well, not quite, but it gives tips on how to calculate it ;)

Summary

My short experiment with OpenAI plugins allowed me to understand how they work and what problems application developers have to face. The available documentation is very basic, and there is a lack of information on how to approach plugin design, such as tips and tricks or solutions to the most common problems. I feel that the problem of responses that are too large will affect every developer who tries to build a plugin for an already existing service. It seems to me that several proven strategies for these problems can be described and enforced.

For me, building a plugin for OpenAI is a great exercise to make sure we understand the main task of our tool or service. It resembles the mobile-first approach known from interface design: we start with mobile because we have the biggest limitations there. We need to know which information and interactions are key and which are less important. The OpenAI plugin is another breakpoint of your application, but this time the limitations are much more significant, as you are dealing with a text interface. What is the key value of your service? What customer problems does it solve? What questions will your users ask? How can your service solve them? These are great questions that developers often ask themselves too late. Building an interface with a plugin can be a great exercise that forces us to ask ourselves important questions, and the answers may affect how we later design an interface for mobile or desktop devices.

For the whole idea of plugins, however, the biggest challenge lies with OpenAI: distributing plugins and integrating them with ChatGPT. Plugins have been released as a test version, and only recently have users gained access to search and the ability to browse plugins by category. However, this is only the first step. Will OpenAI manage to persuade masses of users to use them? Will users be able to find them on their own and find out about the existence of plugins they are interested in? Will plugins be suggested or activated automatically? Will OpenAI build a business model allowing even more developers to dive into this adventure?

The idea itself looks very promising and has great technical possibilities, but time will tell whether it will be possible to gather a wider range of users using this tool and whether developers of web applications will consider OpenAI as an important distribution channel for their tools.

By the way, I was amazed at how much of the plugin code Copilot and ChatGPT (GPT-4) wrote for me. I started to wonder for a moment - was I, the plugin developer, really needed?